Why vision is the future for mobile robotics

We say vision is the future of mobile robotics, but why? It’s because we’ve found that vision combined with inertial sensing and AI sensor fusion uniquely enables mobile robots to do what people do every day: autonomously sense their surroundings as they move through them, map them out intelligently, and localize themselves within that map. With Alphasense Position we are bringing vision and all its advantages to the market.


How mobile robotics can fulfill its potential with Visual SLAM

Mobile robots are widely used today in industrial automation. But look more closely at the applications they are deployed in and you'll recognize a common theme: whether they deliver supplies upstream of a production line, handle finished goods further downstream, or cart pallets around warehouses, today's mobile robots thrive in environments that are structured, flat, static, and often signposted with reflectors or other markings.

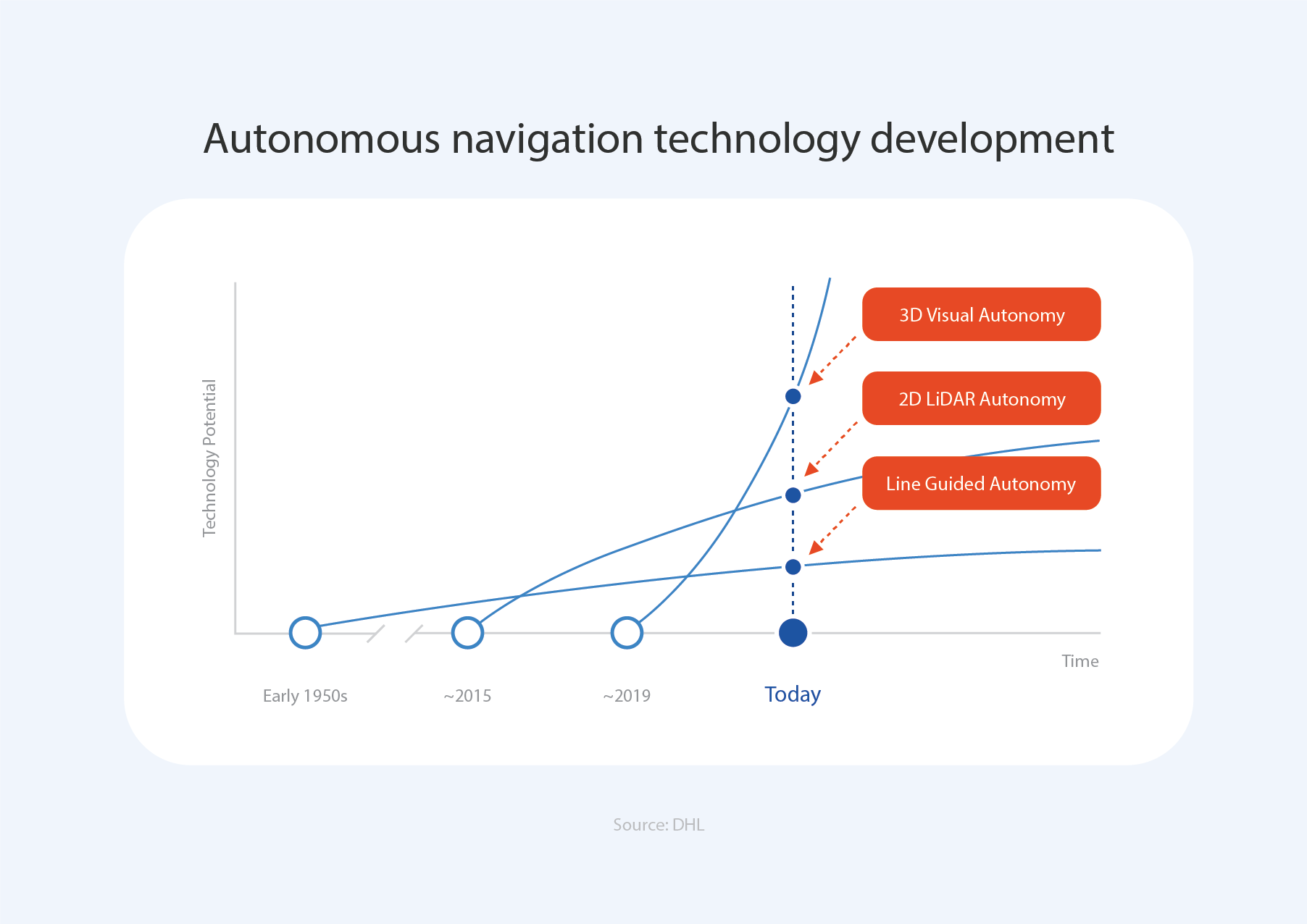

When planning a mobile robotics deployment with today's leading positioning technologies, it's impossible to avoid a tug of war between four competing requirements: accuracy, the ability to handle dynamic environments, flexibility in modifying the area of operation, and low setup and maintenance costs. Line following, 2D and 3D lidar, and laser triangulation, for instance, each meet two or three of these criteria, but none meets all four.

For mobile robotics to fulfill its potential, it needs a positioning solution that lets it grow out of the niche it occupies today. That solution must be easy to set up, accurate, flexible, and able to deal with highly dynamic environments both indoors and outdoors, and it must be deployable without expert know-how or additional infrastructure.

This is why we say vision is the future for mobile robotics. Combined with inertial sensing and AI sensor fusion, vision uniquely enables mobile robots to do what people do every day: autonomously sense their surroundings as they move through them, map them out intelligently, and localize themselves within that map.

According to the DHL Trend Radar, 3D visual autonomy technology is currently maturing and will increase its potential exponentially within the next five years.

The benefits of Sevensense Visual SLAM

Our 3D visual SLAM technology, developed in-house at Sevensense, uses multiple visual cameras mounted on the moving robot to observe its surroundings in real-time. Fusing the image data with inertial data gathered as the robot advances creates a highly accurate 3D map of the space surrounding it. By matching the images it sees with those stored in the map, the robot can localize itself instantly anywhere within that space.
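To illustrate the general principle of fusing inertial and visual data, here is a minimal Python sketch. It is not Sevensense's algorithm, which works in full 3D with multiple cameras. Instead it swaps in a textbook one-dimensional Kalman filter: inertial data drives a dead-reckoning prediction whose uncertainty grows at every step, and each successful match against the map supplies an absolute position fix that pulls the estimate back. The names `ScalarKalman` and `fuse`, as well as the noise values, are hypothetical choices for illustration only.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence


@dataclass
class ScalarKalman:
    """Hypothetical 1D Kalman filter; the state is position along one axis in metres."""
    x: float = 0.0   # current position estimate
    p: float = 1.0   # variance of the estimate (large = uncertain start)
    q: float = 1e-4  # assumed variance added by each inertial prediction step
    r: float = 1e-5  # assumed variance of a visual position fix

    def predict(self, displacement: float) -> None:
        # Inertial dead reckoning: shift by the integrated displacement.
        # Integration errors accumulate, so the uncertainty grows.
        self.x += displacement
        self.p += self.q

    def update(self, z: float) -> None:
        # Visual correction: blend in an absolute position obtained by
        # matching camera images against the map, weighted by the Kalman gain.
        k = self.p / (self.p + self.r)
        self.x += k * (z - self.x)
        self.p *= 1.0 - k


def fuse(displacements: Sequence[float],
         fixes: Sequence[Optional[float]],
         kf: Optional[ScalarKalman] = None) -> List[float]:
    """Run predict/update per time step; a None fix means no map match at that step."""
    if kf is None:
        kf = ScalarKalman()
    track = []
    for d, z in zip(displacements, fixes):
        kf.predict(d)
        if z is not None:
            kf.update(z)
        track.append(kf.x)
    return track


if __name__ == "__main__":
    # Simulated run: the robot truly moves 0.10 m per step, but the inertial
    # estimate over-reports 0.11 m; a visual fix arrives every fifth step.
    steps = 20
    inertial = [0.11] * steps
    visual = [0.1 * (i + 1) if (i + 1) % 5 == 0 else None for i in range(steps)]
    fused = fuse(inertial, visual)
    print(f"dead reckoning: {sum(inertial):.3f} m, fused: {fused[-1]:.3f} m, truth: 2.000 m")
```

In this simulated run, pure dead reckoning ends about 20 cm off, while the fused estimate stays within a few millimeters of the true position because every visual fix resets the accumulated inertial drift.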

One of the key benefits of Sevensense VSLAM is that the mapping never stops. The 3D map is always up to date, reflecting even the slightest changes in the surroundings, whether they are at ground level or higher up. Moreover, the system automatically integrates changes in the overall layout of its area of operation, updating the map with new details as it encounters them.

Because the process is fully automated, no human intervention is needed to transform the sensed data into the high-definition map that the robots use to localize themselves, making mapping fast, easy, and highly precise. In deployments, we have measured accuracies on the order of four millimeters, well exceeding the centimeter-level accuracy required by even the most demanding applications.

Bringing Visual SLAM to the market with Alphasense Position

We brought the Sevensense 3D VSLAM technology to the market in the form of Alphasense Position, the first industrial-grade multi-camera solution for plug-and-play visual SLAM. Designed to bring VSLAM technology to any type of mobile ground vehicle, Alphasense Position offers full 3D mapping and pose output, enabling robots to navigate on uneven terrain, ramps, and multiple floors.

The solution delivers centimeter-level positioning accuracy even in the most challenging environments, such as large open spaces or long, featureless corridors, without requiring any external infrastructure. Designed for indoor and outdoor operation, its high-quality cameras ensure reliable performance in all lighting and weather conditions.

Alphasense Position is quick and easy to initialize and deploy, causing no downtime and requiring no costly tools. The solution uses cutting-edge algorithms to autonomously calibrate the production system and create highly accurate 3D maps, which can be shared across a fleet of vehicles to orchestrate collaboration. Using artificial intelligence, the system can also constantly track changes in the environment and keep the map up to date.

Alphasense Position is available in a compact configuration, featuring five pre-calibrated cameras for fast and easy integration. Alternatively, an extended configuration supports up to eight independent cameras, allowing the camera setup to be tailored to the vehicle.

The solution can be controlled via a dedicated web interface, a REST API, or ROS for research and development. To facilitate the development of custom navigation and path planning solutions, it supports multiple data exchange protocols, including Ethernet UDP and ROS, for transferring raw image and inertial sensor data.

The Alphasense revolution is already underway

Vision may be the future for mobile robots, but the Alphasense revolution is already well underway. The accuracy, low cost of setup and deployment, flexibility, and adaptability enabled by Alphasense Position's 3D VSLAM technology are already transforming automated applications in the industry.

Winning a technology challenge organized by ABB Robotics to identify the most advanced navigation solution in the world demonstrated the maturity of our Visual SLAM technology. This success also led to a partnership in which Sevensense and the leading robot manufacturer will bring a new generation of autonomous mobile robots to the shop floor.

Working with Kyburz, an international leader in high-quality mobility and transport solutions for the last mile, we supported the development of an autonomous self-driving eTrolley, the eT4, for indoor and outdoor last-mile delivery in all weather and lighting conditions. In addition to reducing the labor costs associated with last-mile delivery, the eT4 gave Kyburz a head start in a highly contested market.

Wetrok, which develops modern cleaning technology and plays a leading role in the research and development of professional cleaning machines, designed the Robomatic Marvin, a fully autonomous and independent cleaning machine, around Alphasense Autonomy, our robot autonomy system that combines VSLAM with advanced AI local perception and navigation technology. Once the machine has been trained to clean a given area, cleaning professionals are free to focus on more sophisticated tasks while Robomatic Marvin scrubs and dries the floor on its own.

Test Alphasense Position in less than a day

To make it easy for mobile robot developers to experience the benefits of Alphasense Position firsthand, we developed an evaluation kit for both the compact and the extended product variants. The Alphasense Console, a user-friendly web interface that can be accessed from a desktop, laptop, or mobile device, makes initial setup and use effortless.

The evaluation kits are delivered with a quick start guide, and users receive access to the Sevensense service desk for a smooth testing experience. This makes it possible to install Alphasense Position in under an hour and test the solution in less than a day.

A huge leap forward for the mobile robotics industry

Mobile robots based on legacy positioning and navigation technologies have already proven their potential to increase productivity, replace menial labor, and streamline operations in niche settings that allow for their deployment.

Boldly embracing vision as a primary input for SLAM is a huge leap forward for the mobile robotics industry, promising to help it grow out of its current niche into ever more diverse industrial, commercial, and user-facing applications.

To learn more about Alphasense Position, watch our dedicated webinar, visit the Alphasense Position product page, or order an evaluation kit today!